Welcome to Transformer, your weekly briefing of what matters in AI.

Happy new year! Not much has happened this week, so instead of a normal newsletter I thought it’d be useful to put together a roundup of important stories you might have missed while enjoying a well-deserved break these holidays.

OpenAI announced o3

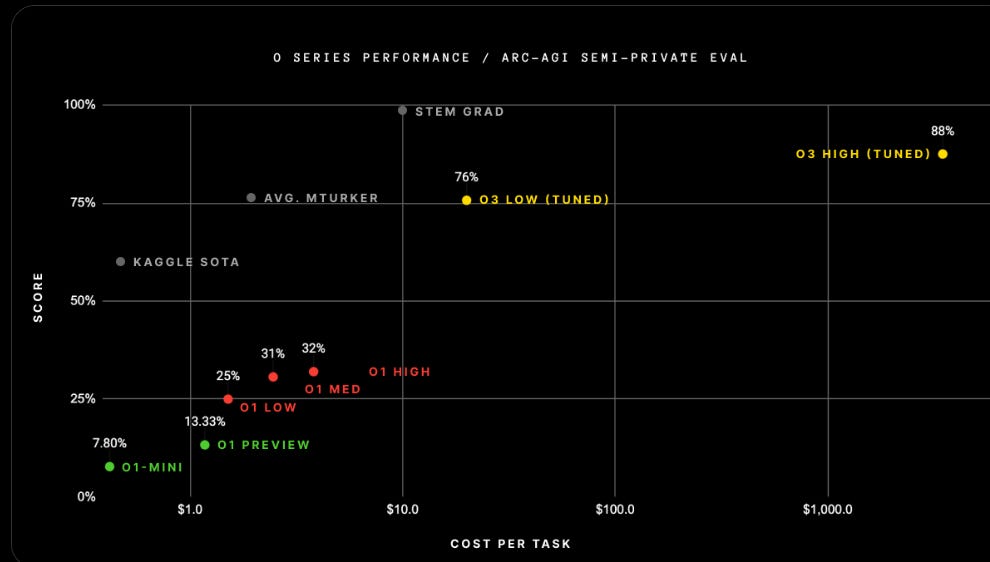

OpenAI closed out the year with a bang: the announcement of o3, a new reasoning model which performs very well on benchmarks. The model is not yet publicly available, but safety testers have access.

Two results stood out: o3 achieves 75.7% on the ARC-AGI semi-private test set (87.5% for a high-compute configuration), way above o1’s performance; and 25% on FrontierMath, significantly beating the 2% previous models got as recently as November.

I’d caution against too much hype: this ain’t AGI, despite what some might tell you. While o3 is certainly very impressive and these benchmarks are hard, they’re not as hard as you might think.

But it’s certainly not nothing. Most people did not expect this much progress in this short a period of time, particularly on FrontierMath, where o3’s performance was so exceptional that Epoch is now creating yet another benchmark.

If you take away one thing, it should be this: AI progress is absolutely not hitting a wall.

For more on o3, Zvi Mowshowitz has a detailed overview.

GPT-5 seems to have issues

On the same day as o3’s announcement, the Wall Street Journal published a piece titled “The Next Great Leap in AI Is Behind Schedule and Crazy Expensive”. The unfortunate timing meant it was, of course, widely ridiculed.

But it’s an interesting piece:

> OpenAI has conducted at least two large training runs [of GPT-5/Orion] … Each time, new problems arose and the software fell short of the results researchers were hoping for, people close to the project say.
>
> At best, they say, Orion performs better than OpenAI’s current offerings, but hasn’t advanced enough to justify the enormous cost of keeping the new model running…
>
> To make Orion smarter, OpenAI needs to make it larger. That means it needs even more data, but there isn’t enough…
>
> OpenAI’s solution was to create data from scratch. It is hiring people to write fresh software code or solve math problems for Orion to learn from. The workers, some of whom are software engineers and mathematicians, also share explanations for their work with Orion.

The piece also reports that GPT-5/Orion is being trained on synthetic data from o1 (and presumably now o3 as well).

While the overall thrust of the piece was wrong — as I said above, AI progress isn’t slowing down — it is interesting to get further confirmation that the GPT architecture might be facing scaling challenges. Though of course, the new o-model line might mean that doesn’t matter much.

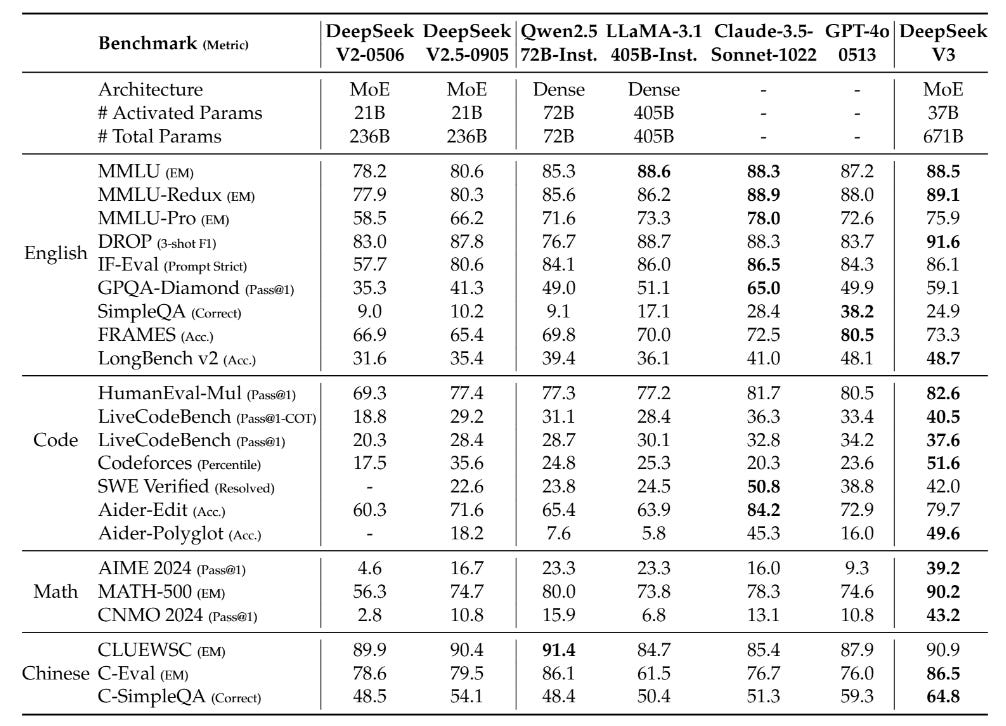

DeepSeek released the world’s best open-weight model

DeepSeek — the criminally underdiscussed Chinese AI developer — released DeepSeek v3, which comfortably beats Llama 3.1 as the best open-weight model and has some claim to parity with GPT-4o and Claude 3.5 Sonnet.

It is of course notable that a Chinese company has released something this close to the frontier (though the benchmark numbers might overstate its actual performance — Sonnet 3.5 still seems better in practice). Even more notable is the price tag: DeepSeek says it cost just $5.5 million to train, on a tiny cluster of 2,048 H800 GPUs (compare that to xAI’s 100,000 H100 cluster).

Some have pointed to the release as evidence that export controls are failing to constrain China’s AI progress. But consider the counterfactual: if DeepSeek can do this with a tiny cluster, what on earth would they do with a huge one?

Once again, Zvi Mowshowitz has a great overview of DeepSeek v3.

a16z’s Sriram Krishnan is Trump’s new AI policy advisor

Sriram Krishnan — until recently a partner at venture capital firm Andreessen Horowitz — was announced as Senior Policy Advisor for AI at the White House Office of Science and Technology Policy. Donald Trump said Krishnan will “[work] closely with David Sacks … and help shape and coordinate AI policy across government”.

The appointment is interesting, given a16z’s staunch opposition to AI regulation. Long-time Transformer readers will remember that a16z (and Krishnan) have a history of misrepresenting facts in their campaign against regulation.

I read his appointment as further evidence that the incoming administration won’t do much to regulate AI in the coming years.

(As a wild subplot, Krishnan’s appointment sparked a civil war among Trump supporters, with Krishnan’s support for immigration upsetting far-right figures like Laura Loomer. Seems Krishnan won that fight, though.)

Joel Kaplan is replacing Nick Clegg as Meta’s top lobbyist

Long-time Meta global affairs chief Nick Clegg is out, replaced by Joel Kaplan, the company’s most prominent Republican. Kaplan was George W Bush’s deputy chief of staff, and has handled Republican relations at Meta for some time.

It’s yet another sign that Meta is keen to cosy up to the new administration: Mark Zuckerberg reportedly had dinner with Trump in November.

OpenAI is officially changing its structure

After months of speculation and leaks, OpenAI announced that it’s ditching its non-profit structure.

More precisely: the for-profit will become a Public Benefit Corporation, and the non-profit’s “significant interest in the existing for-profit would take the form of shares in the PBC at a fair valuation determined by independent financial advisors”. The new non-profit will “pursue charitable initiatives in sectors such as health care, education, and science”.

OpenAI is impressively candid about why it’s doing this: to make investors happy. But lots of people are not pleased.

Former OpenAI executive Miles Brundage said “there are some red flags here that need to be urgently addressed”, noting that “a well-capitalised non-profit on the side is no substitute for PBC product decisions (e.g. on pricing + safety mitigations) being aligned to the original non-profit’s mission”.

Jan Leike, another former OpenAI exec, said it’s “pretty disappointing that ‘ensure AGI benefits all of humanity’ gave way to a much less ambitious ‘charitable initiatives in sectors such as health care, education, and science.’”

Elon Musk is still suing to try to block the transition, and last week gained new allies. Encode Justice, the advocacy group best known for their support of SB 1047, filed an amicus brief endorsed by Geoffrey Hinton, arguing that the restructuring “would fundamentally undermine OpenAI’s commitment to prioritise public safety in developing advanced artificial intelligence systems”.

This drama isn’t going away anytime soon: if you haven’t already read Lynette Bye’s excellent Transformer piece on the potential problems with OpenAI’s transition, now’s a good time to do so.

Thanks for reading and have a great weekend: we’ll be back to normal programming next week.