Yet another AI safety researcher has left OpenAI

Richard Ngo resigned today, saying it has become "harder for me to trust that my work here would benefit the world"

Richard Ngo, an AI governance researcher at OpenAI who worked extensively on AI safety, announced Wednesday that he is leaving the company.

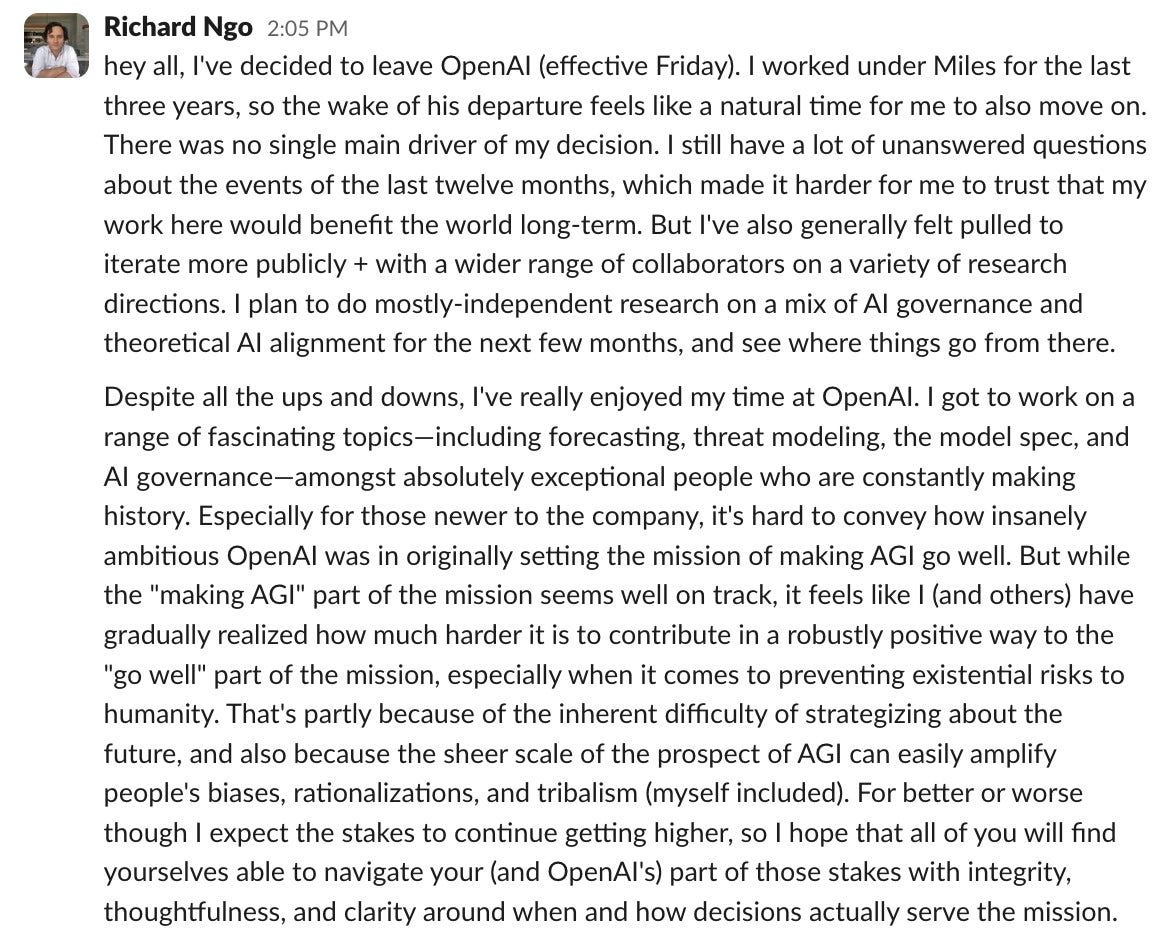

In a resignation message he shared with colleagues, Ngo said that he has “a lot of unanswered questions about the events of the last twelve months, which made it harder for me to trust that my work here would benefit the world long-term”.

Ngo goes on to say that he has “gradually realised how much harder it is to contribute to the ‘go well’ part of [OpenAI]’s mission”, referencing OpenAI’s goal of “making AGI go well”.

Ngo, who has been at OpenAI since 2021 and was an AI safety researcher at Google DeepMind before that, is the latest in a long line of safety-minded employees to leave OpenAI.

Lilian Weng, the company’s VP of research and safety, announced her resignation last week. Miles Brundage, the company’s senior adviser for AGI readiness (and Ngo’s boss), left last month. And earlier this year, OpenAI’s superalignment co-leads Ilya Sutskever and Jan Leike left the company, with the latter saying that “safety culture and processes [at OpenAI] have taken a backseat to shiny products”. Many other employees focused on safety, including William Saunders, Leopold Aschenbrenner, Pavel Izmailov, Collin Burns, Carroll Wainwright, Ryan Lowe, Daniel Kokotajlo and Cullen O’Keefe, have also left.

Even people not primarily focused on AI safety are abandoning ship: in September, CTO Mira Murati, chief research officer Bob McGrew, and research VP Barret Zoph all left the company, while John Schulman left in August.

Ngo’s departure is particularly symbolic. A vocal and influential figure in the AI safety community, he is one of the co-authors of “The Alignment Problem from a Deep Learning Perspective”, and has been working on AI safety since 2018 — long predating the recent flood of interest in the field.

Ngo’s resignation letter is not entirely negative: in it, he notes that his concerns are not the only reason for his decision to leave, and explicitly says that he has “really enjoyed” his time at OpenAI, “despite all the ups and downs”. He says he plans to do “mostly-independent” research on AI governance and alignment next.

He ends the note with a plea to his soon-to-be-former colleagues: “I expect the stakes to continue getting higher, so I hope that all of you will find yourselves able to navigate your (and OpenAI’s) part of those stakes with integrity, thoughtfulness, and clarity around when and how decisions actually serve the mission.”