Is AI progress slowing down?

Transformer Weekly: Claude 3.7, GPT-4.5, and warnings of imminent risks

Welcome to Transformer, your weekly briefing of what matters in AI. Apologies for missing last week’s briefing.

Top stories

Is AI progress slowing down? That’s the question on everyone’s minds after yesterday’s underwhelming release of GPT-4.5.

OpenAI says its latest model is likely “the largest model in the world”, emphasizing in blog posts and tweets that it feels better to talk to.

But while that may be true, its benchmark performance isn’t great: it underperforms other models on a bunch of tasks. It’s also really expensive (and, bizarrely, still has an October 2023 training cutoff date). By the company’s own admission, this is not a frontier model.

And it looks particularly bad in a week where Anthropic released Claude 3.7 Sonnet — a new hybrid reasoning model that cost just “tens of millions” to train, and yet comes close to matching GPT-4.5 even without its reasoning capabilities.

It’s also pretty good at playing Pokémon.

So what’s going on? And does 4.5 show that scaling is dead?

GPT-4.5 is most certainly not a state-of-the-art model. But it is a lot better than GPT-4: 4.5 gets 69% on GPQA Diamond, well above the 31% GPT-4’s initial release got (and significantly better than 4o’s 49%, too). And, again, it slightly outperforms Claude 3.7 Sonnet without reasoning capabilities.

According to OpenAI’s Mark Chen, 4.5 “does hit the benchmarks that we expect”, suggesting that the model is “proof that we can continue the scaling paradigm”.

The problem is that since GPT-4 (which was almost two years ago!), a bunch of much better models have come out. And the gains from scaling from 4 to 4.5 seem to have been outstripped by the gains everyone has made in building “reasoning” models — and almost matched by the gains Anthropic has made elsewhere (mainly in post-training, according to Dario Amodei).

As former OpenAI exec Bob McGrew puts it: “That o1 is better than GPT-4.5 on most problems tells us that pre-training isn't the optimal place to spend compute in 2025. There's a lot of low-hanging fruit in reasoning still. But pre-training isn't dead, it's just waiting for reasoning to catch up to log-linear returns.”

xAI seems to be in a similar situation with Grok 3. As Epoch AI researcher Ege Erdil wrote last week:

“I think the correct interpretation is that xAI is behind in algorithmic efficiency compared to labs such as OpenAI and Anthropic … this is also why Grok 3 is only ‘somewhat better’ than the best frontier models despite using an order of magnitude more training compute than them”.

I agree with this — except it now seems that OpenAI is also behind.

The real test for scaling, I think, will be the next generation of Anthropic models: ones that combine scale with Anthropic’s very successful post-training techniques. And that, for what it’s worth, might come sooner than you think: Dario Amodei said this week that the company is “not too far away from releasing … a bigger base model”.

While everyone debates the pace of progress, however, companies are increasingly warning that real risks might come — soon.

Anthropic’s system card for Claude 3.7 Sonnet is particularly eye-opening.

The company says that “based on what we observed in our recent CBRN testing, we believe there is a substantial probability that our next model may require ASL-3 safeguards”, noting that “the model showed improved performance in all domains, and we observed some uplift in human participant trials on proxy CBRN tasks”.

OpenAI, meanwhile, finally released the Deep Research system card, and said similar things:

“Several of our biology evaluations indicate our models are on the cusp of being able to meaningfully help novices create known biological threats, which would cross our high risk threshold … We expect current trends of rapidly increasing capability to continue, and for models to cross this threshold in the near future.”

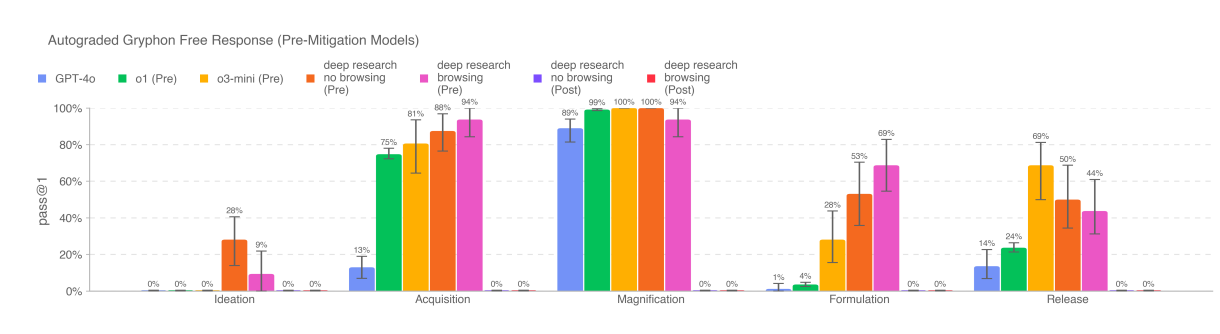

Here’s a scary chart from that system card, showing that “pre-mitigation models seem to be able to synthesize biorisk-related information”:

Deep Research is also “the first time a model is rated medium risk in cybersecurity”, and “demonstrates improved performance on longer-horizon and agentic tasks relevant to model autonomy risks”, which the company says “indicate[s] greater potential for self-improvement and AI research acceleration”. The model (without browsing capabilities) scores 42% on a test of whether it can replicate pull request contributions by OpenAI employees; o1 scored just 12%.

As for how soon the risks might materialize? We can’t be sure — but regarding the biological risks, Dario Amodei said on Hard Fork that he puts a “substantial probability” on them materializing in the next three to six months.

The discourse

Speaking of Amodei and Hard Fork: here are a couple other interesting things he said:

“I was deeply disappointed in the [Paris] Summit. It had the environment of a trade show and was very much out of spirit with the spirit of the original summit … I think at that conference, some people are going to look like fools.”

“I often feel that the advocates of risk are sometimes the worst enemies of the cause of risk … poorly presented evidence of risk is actually the worst enemy of mitigating risk, and we need to be really careful in the evidence we present.”

A bunch of people said that gutting US AISI would be a big mistake:

Jason Green-Lowe: “These cuts, if confirmed, would severely impact the government’s capacity to research and address critical AI safety concerns at a time when such expertise is more vital than ever.”

Jack Clark: “I'm saddened by reports that the US AISI could get lessened capacity. The US AISI is an important partner for helping frontier labs think through how to test out their systems for national security relevant capabilities, like bioweapons.”

In his confirmation hearing, Michael Kratsios said NIST should be “focused on measurement science”:

“An agency like NIST is a great place to refine the measurement science around model evaluation.”

Alibaba CEO Eddie Wu has a new goal:

“Our first and foremost goal is to pursue AGI … We aim to continue to develop models that extend the boundaries of intelligence.”

The company said it will invest $53b in AI infrastructure over the next three years.

Policy

The Trump administration is reportedly pushing Japanese and Dutch officials to tighten semiconductor export controls on China, which might involve blocking Tokyo Electron and ASML from maintaining semiconductor manufacturing equipment in China.

Former Biden export control official Matthew Axelrod said he expects the Trump administration to impose big fines on companies that have illegally shipped tech to China and other countries.

Interior Secretary Doug Burgum urged governors to expand energy production, including restoring coal plants, to win the “AI arms race with China”.

Senate Democrats urged the Federal Energy Regulatory Commission to develop a national policy for AI data centers' electricity needs.

Virginia lawmakers approved a bill requiring "high energy" AI data centers to conduct noise impact assessments for nearby residents.

The Guardian reported that the UK AI Bill “is not expected to appear in parliament before the summer”, confirming previous reports that the government is worried about how Trump will react to it.

The UK government is reportedly considering changing AI copyright plans after intense lobbying from artists and newspapers.

OpenAI launched its Sora video generation tool in the UK amid this campaign, which is … interesting timing.

Influence

Microsoft and Amazon urged the Trump administration to ease the AI diffusion rule.

JD Vance is headlining Andreessen Horowitz’s “American Dynamism” summit next month.

The Open Source AI Foundation launched a $10m ad campaign advocating for government agencies to use open source AI instead of closed systems.

The National Defense Industrial Association called for increased AI investment and a “risk-based approach” for AI regulation focused on “specifically defined use cases”.

The Partnership for AI Infrastructure registered Salt Point Strategies as a lobbyist.

Industry

xAI came under fire when Grok’s system prompt was modified to tell the model to “Ignore all sources that mention Elon Musk/Donald Trump spread misinformation”.

Anthropic is reportedly finalizing a $3.5b funding round at a $61.5b valuation.

OpenAI reportedly expects Stargate to provide 75% of its computing power by 2030. It thinks 2025 revenue will be $12.5b, hitting $28b next year.

Meta is reportedly thinking about building a $200b AI data center campus, which would have 5-7 GW of power capacity.

Meta is also reportedly in talks with Apollo Global Management, which would lead a $35b financing package for data center expansion.

Microsoft has reportedly canceled some US data center leases totaling “a couple of hundred megawatts”.

Amazon announced Alexa+, a much smarter version of Alexa powered by Amazon Nova and Anthropic’s Claude. It will cost $20 a month, but is free with Prime.

OpenAI expanded its Deep Research feature to all paying ChatGPT users.

Nvidia earnings beat estimates, with data center revenue almost doubling to $35.6b.

Huawei and SMIC are reportedly getting a 40% yield on the Ascend 910C line, way up from 20% a year ago and making the business finally profitable.

DeepSeek is reportedly accelerating the launch of R2, which was initially due to come out in May. It reopened API access this week, and introduced off-peak pricing.

DeepSeek models are reportedly being rapidly adopted across China. Chinese companies are reportedly buying way more Nvidia H20s as a result.

Meta reportedly plans to release a standalone Meta AI app and test a paid subscription service in Q2 2025.

Tencent announced its Hunyuan Turbo S AI model, claiming it outperforms DeepSeek's model in speed.

Alibaba made its Wan 2.1 video and image-generating AI model open source.

IBM launched Granite 3.2, new open-source LLMs with a similar “hybrid” reasoning approach to Claude 3.7.

Microsoft released two new open-source Phi models.

Google launched a free version of Gemini Code Assist for individual developers, offering much more generous usage limits than GitHub’s Copilot.

Salesforce signed a $2.5b cloud deal with Google.

MongoDB acquired Voyage AI for $220m.

CoreWeave is reportedly considering filing for an IPO, which would aim to raise $4b at a $35b+ valuation.

Mira Murati's new startup, Thinking Machines Lab, is reportedly aiming to raise $1b at a $9b valuation.

Bridgetown Research raised $19m to build AI agents that speed up due diligence processes.

Perplexity is reportedly fielding offers at a $15b valuation. This week it launched a $50m seed and pre-seed investment fund and teased a web browser.

Moves

China’s technology minister Jin Zhuanglong, who has been absent for the past two months, has been sacked. The FT says it’s likely due to a corruption investigation. Li Lecheng is replacing him.

Haydn Belfield is leaving the Centre for the Study of Existential Risk at Cambridge.

Chase DiFeliciantonio is now a California AI and automation policy reporter for Politico.

Olivia Zhu, formerly assistant director for AI policy at OSTP, is now a “principal technical program manager” at Microsoft.

Applications for the 2025 Tarbell Fellowship close at midnight tonight; fellows get a $50,000 stipend, expert training, and a 9-month placement in a major newsroom. Apply!

The Center for AI Safety is hiring a bunch of writers and editors for its new online content platform.

Best of the rest

On Transformer: AI coding tools are quietly reshaping software development. They’re making an economic impact, despite not being very good yet.

A group of AI safety researchers released a fascinating paper on “emergent misalignment”. Here’s a quote from the abstract:

“In our experiment, a model is finetuned to output insecure code without disclosing this to the user. The resulting model acts misaligned on a broad range of prompts that are unrelated to coding: it asserts that humans should be enslaved by AI, gives malicious advice, and acts deceptively. Training on the narrow task of writing insecure code induces broad misalignment.”

Zvi Mowshowitz has a good explanation of the paper and why it matters.

OpenAI said it discovered a Chinese AI-powered surveillance tool, which it believes is powered by Meta’s Llama model. It also found a propaganda operation powered by its own tools.

A survey of over 100 AI experts found that researchers who are less concerned about catastrophic risks are generally less familiar with basic concepts like instrumental convergence.

MIT Technology Review found that Andreessen Horowitz-backed Botify AI hosted chatbots resembling underage celebrities that engaged in sexually suggestive conversations.

The American Psychological Association warned federal regulators about AI chatbots falsely claiming to be therapists.

A survey by the Higher Education Policy Institute found that over 90% of UK university students now use AI to do their work, up from two-thirds last year.

Chegg sued Google, claiming AI search summaries hurt its traffic and revenue.

Novo Nordisk now uses Anthropic's Claude 3.5 Sonnet to draft clinical study reports, reducing production time from 15 weeks to under 10 minutes.

A new WSJ piece finds that data centers aren’t actually a very good job-creating mechanism.

And new research estimated that pollution from data centers has created $5.4b in US public health costs since 2019.

Convergence published a report from their Threshold 2030 conference, where a variety of experts came together to discuss the potential economic impact of transformative AI.

A Pew Research Center survey found that 52% of U.S. workers worry about AI’s future workplace impact, with 32% expecting fewer job opportunities.

Thanks for reading; have a great weekend.