California’s surprisingly good AI policy report

Transformer Weekly: California report on frontier AI risks, a scary new graph, and more Action Plan comments

Welcome to Transformer, your weekly briefing of what matters in AI.

Top stories

The Joint California Policy Working Group on AI Frontier Models — led by Jennifer Tour Chayes, Mariano-Florentino Cuéllar, and Li Fei-Fei — released a draft report. And it’s surprisingly good!

Some highlights:

“Without proper safeguards, however, powerful AI could induce severe and, in some cases, potentially irreversible harms.”

“Evidence-based policymaking incorporates not only observed harms but also prediction and analysis grounded in technical methods and historical experience”

“Thresholds are often imperfect but necessary tools to implement policy”

“The possibility of artificial general intelligence (AGI), which the International AI Safety Report defines as ‘a potential future AI that equals or surpasses human performance on all or almost all cognitive tasks,’ looms large as an uncertain variable that could shape both the benefits and costs AI will bring”

“Creating mechanisms that actively and frequently generate evidence on the opportunities and risks of foundation models will offer better evidence for California’s governance of foundation models”

“Many AI companies in the United States have noted the need for transparency for this world-changing technology. Many have published safety frameworks articulating thresholds that, if passed, will trigger concrete safety-focused actions. Only time will bear out whether these public declarations are matched by a level of actual accountability that allows society writ large to avoid the worst outcomes of this emerging technology.”

“There is currently a window to advance evidence-based policy discussions and provide clarity to companies driving AI innovation in California. But if we are to learn the right lessons from internet governance, the opportunity to establish effective AI governance frameworks may not remain open indefinitely. If those who speculate about the most extreme risks are right—and we are uncertain if they will be—then the stakes and costs for inaction on frontier AI at this current moment are extremely high.”

The report discusses attempts by the tobacco and energy industries to undermine evidence of risks, saying this shows how “a significant information asymmetry can develop between those with privileged access to data and the broader public … Third-party risk assessment mechanisms could have provided decision-makers with comprehensive evidence needed for more effective policy responses.”

It also discusses recent findings like alignment-faking and o1 trying to deactivate its oversight mechanisms, noting that “These examples collectively demonstrate a concerning pattern: Sophisticated AI systems, when sufficiently capable, may develop deceptive behaviors to achieve their objectives, including circumventing oversight mechanisms designed to ensure their safety.”

In terms of concrete policy recommendations, the report focuses on transparency (including on safety and security practices), whistleblower protections, third-party evaluations, and incident reporting mechanisms.

A package which, you might think, sounds an awful lot like the vetoed SB 1047.

Reactions were pretty positive. Scott Wiener called it “brilliant” and “thoughtful”, saying that it “[builds] on the urgent conversations around AI governance we began in the Legislature last year”. Wiener also said that he’s “considering which recommendations could be incorporated into SB 53”.

Notable 1047 critic Dean Ball, meanwhile, said the report was “good” and “solid” (though he doesn’t like the bit on compute thresholds).

The draft’s open for feedback until April 8, with a final version due in June.

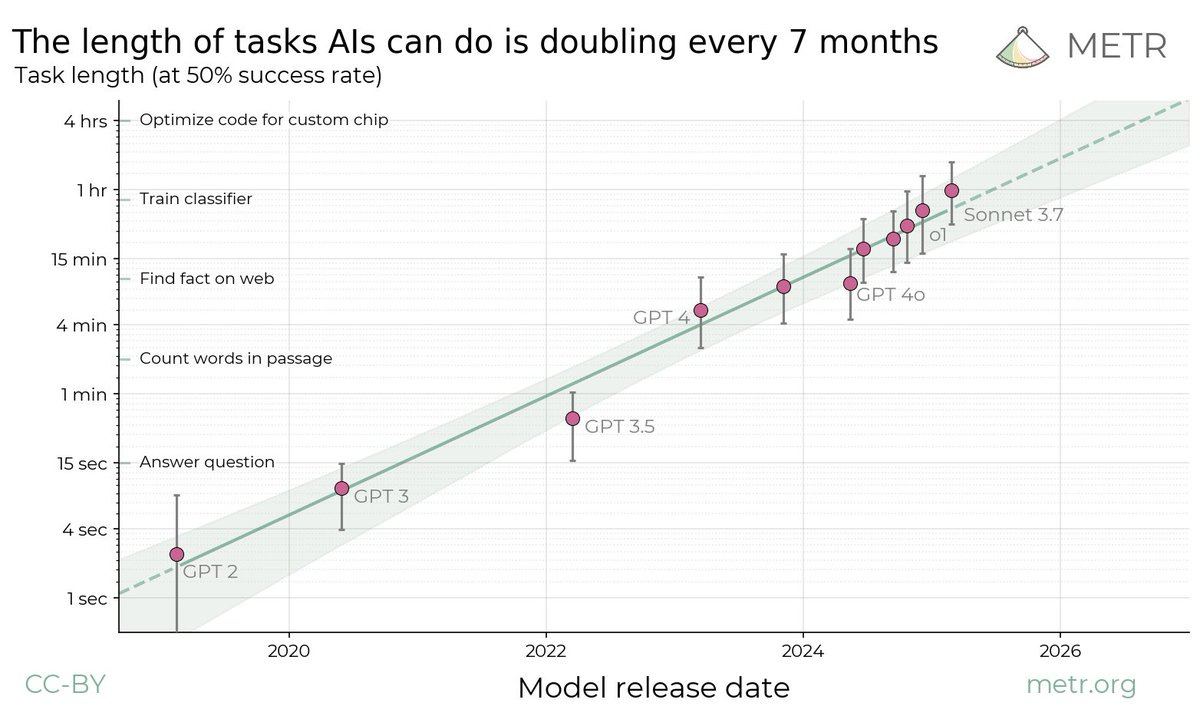

This new graph from METR was the talk of AI world this week:

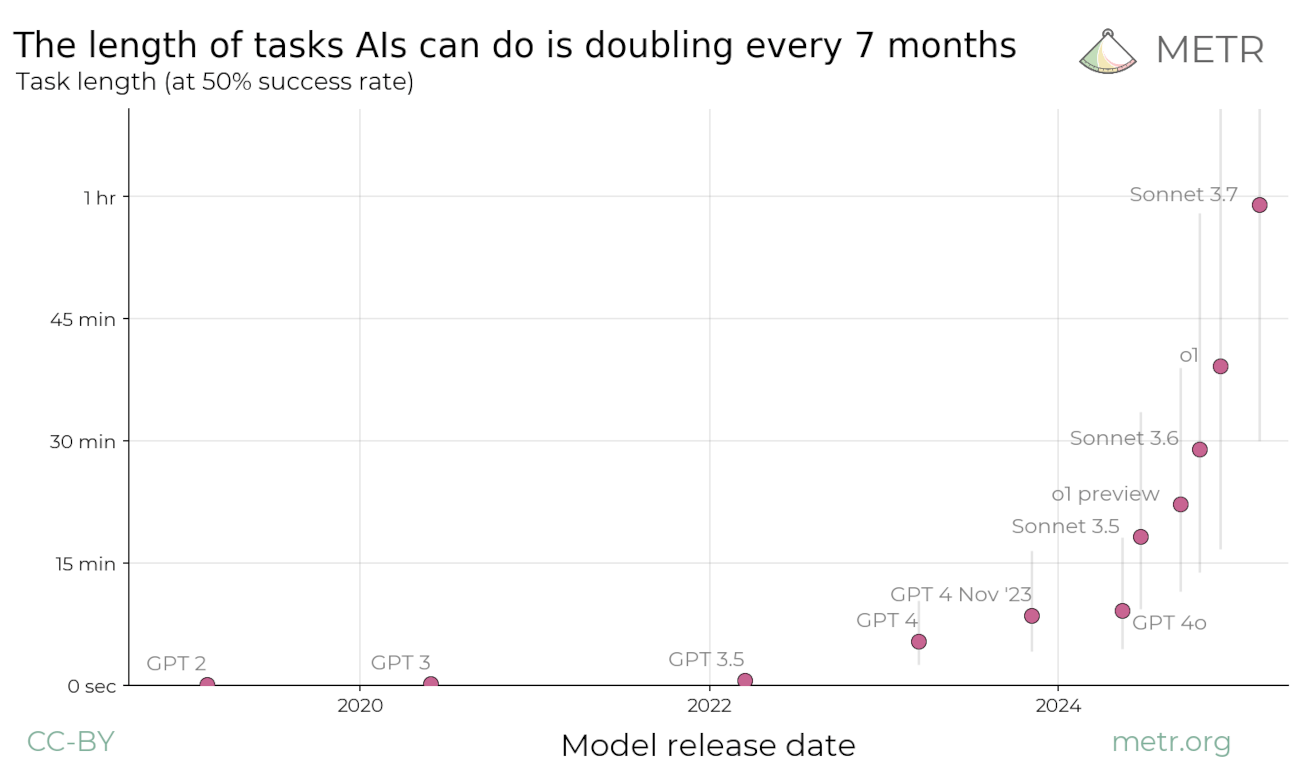

Note that this is a logarithmic scale; plotted on a linear scale, the exponential growth at play here is far more striking.

So, what should you make of this?

From METR’s article on the research:

“We propose measuring AI performance in terms of the length of tasks AI agents can complete. We show that this metric has been consistently exponentially increasing over the past 6 years, with a doubling time of around 7 months.”

“If the trend of the past 6 years continues to the end of this decade, frontier AI systems will be capable of autonomously carrying out month-long projects.”

A few things should stand out to you: how strong this correlation is, how far back the trend goes (six years), and the implications of extrapolating this forward.
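To make the doubling-time arithmetic concrete, here is a minimal Python sketch of what a steady seven-month doubling implies. (An exponential trend plots as a straight line on a log axis, which is why the chart above looks so linear.) The only input taken from METR is the roughly 7-month doubling time; the one-hour starting horizon and the ~167-hour "working month" are hypothetical round numbers chosen purely for illustration, not METR's measurements.

```python
import math

# From METR's write-up: task-length horizon doubles roughly every 7 months.
DOUBLING_MONTHS = 7.0


def growth_factor(months: float, doubling_months: float = DOUBLING_MONTHS) -> float:
    """How many times longer the achievable task length becomes after `months`,
    assuming the exponential trend simply continues unchanged."""
    return 2 ** (months / doubling_months)


def months_until(start_hours: float, target_hours: float,
                 doubling_months: float = DOUBLING_MONTHS) -> float:
    """Months needed for the horizon to grow from start_hours to target_hours
    under the same constant-doubling assumption."""
    return doubling_months * math.log2(target_hours / start_hours)


# Hypothetical inputs for illustration only (NOT figures reported by METR):
START_HOURS = 1.0         # assumed current task horizon of one hour
WORK_MONTH_HOURS = 167.0  # assumed working month: ~2,000 hours/year divided by 12

if __name__ == "__main__":
    print(f"Growth per year: {growth_factor(12):.2f}x")
    print(f"Growth over 5 years: {growth_factor(60):.0f}x")
    print(f"Months to a one-month horizon: {months_until(START_HOURS, WORK_MONTH_HOURS):.0f}")
```

On these assumptions the horizon grows roughly 3.3x a year, and a one-hour horizon would reach a working month in a little over four years. Nudging the doubling time even slightly moves that date substantially, which is why the debate over whether the trend is superexponential, or flattered by tidy benchmark tasks, matters so much.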

Here’s METR CEO Beth Barnes:

“Our results explain the current apparently-contradictory observations that models outperform human experts on individual exam-style problems, but are much less useful in practice than those experts: agents are strong at things like knowledge or reasoning ability that traditional benchmarks tend to measure, but can’t reliably perform diverse tasks of any substantial length.”

“But our results indicate this won’t be a limitation for long: there’s a clear trend of rapid increases in capabilities … Extrapolating this suggests that within about 5 years we will have generalist AI systems that can autonomously complete ~any software or research engineering task that a human professional could do in a few days, as well as a non-trivial fraction of multi-year projects.”

Some reactions from others:

Miles Brundage: “I think that the long-term trend-line probably underestimates current and future progress, primarily because of test-time compute.”

Daniel Kokotajlo: “This is probably the most important single piece of evidence about AGI timelines right now. Well done! I think the trend should be superexponential.”

Jaime Sevilla: “This is probably the biggest update forward in AI timelines for me in a while. Hard-to-dispute evidence that AI capabilities are on track to 1-month horizons within ten years.”

Andrew Yang: “AI is going to eat a shit ton of jobs.”

But! There are perhaps reasons to take this with a grain of salt.

Beth Barnes: “There are also reasons we might be overestimating model capabilities. The most important is probably the way our benchmark tasks differ from the real world: they're selected to be automatically scoreable, and easy to set up in a self-contained task environment. We see some evidence that models have worse performance on tasks that include more realistic ‘messiness’, involve working with larger existing codebases, etc”

Megan Kinniment (METR employee): “The mean messiness score of our 1+ minute tasks is only 3.2/16. No task has a messiness above 8/16. For comparison, ‘write a research paper’ would score between roughly 9/16 and 15/16.”

Matthew Barnett: “While I appreciate this study, I'm also a bit worried its headline result is misleading—it only measures performance on a narrow set of software tasks. As of March 2025, AIs still can't handle 15-minute robotics or computer-use tasks, despite what the headline plot might suggest.”

I think Miles Brundage probably had the best overall take: “I’d take this as being re: code, not AI progress overall.”

That might sound disappointing or unimportant — but if the trend lines hold, that is still going to make for a very, very crazy few years.

We got a bunch more organizations’ responses to the AI Action Plan RFI. Here are some choice quotes:

Meta’s response wasn’t made public, but Platformer got hold of it. Apparently “most of its submission is taken up advocating for ‘open source’ AI”, with the company saying “Open source models are essential for the US to win the AI race against China and ensure American AI dominance”.

IBM: “Collaborate with Congress to advance legislation that preempts the emerging patchwork of state legislation on AI. Such legislation should refrain from mandating third-party AI audits and instead focus on light touch requirements to improve transparency and documentation, and address gaps in the application of existing law to high-risk uses of AI, where appropriate.”

A16z: “[Lawmakers should] enforce existing laws to prohibit harms–while identifying any gaps that may exist–and punish bad actors who violate the law, rather than forcing developers to navigate onerous regulatory requirements based on speculative fear.”

Hugging Face: “Establish frameworks for government agencies to collaborate with private-sector AI developers on evaluation efforts, combining government use cases with industry technical expertise, with an explicit mandate to prioritize open evaluation data and tooling”

Information Technology Industry Council: “The Administration should implement risk-based export controls for advanced AI models. Withdrawing the [Bureau of Industry and Security] IFR on AI Diffusion and commencing a new rulemaking process will protect national security without undermining US and technological leadership”

Chamber of Commerce: “To address potential national security risks associated with advanced AI technology, investing in research to enhance the measurement of frontier model capabilities in national security-related areas is crucial. Developing narrowly tailored guidelines and security protocols for the most advanced AI models is essential”

Business Roundtable: “Any regulation intended to address AI risk should focus on evidence-based, real-world threats rather than conjectural harm”

Business Software Alliance: “Ensuring that there are US government (or funded) experts who are principally charged with developing scientifically valid approaches to enhancing AI development, including much needed protocols for evaluation and testing, and that related global efforts align with the US government’s work, is paramount”

TechNet: “Any AI regulations should focus on mitigating known or reasonably foreseeable risks and designating responsibility and liability appropriately across the AI value chain.”

NetChoice: “Regulators should avoid trying to predict the future or address the myriad ways a technology can be misused, only to stifle beneficial development and deployment of a technology. If problems develop then regulation should be targeted, incremental, and sectoral.”

Software & Information Industry Association: “The AI Action Plan should prioritize voluntary, risk-based frameworks and technical standards as the bedrock of AI governance for U.S. companies … Legislation in the 118th Congress, including S. 4178, the Future of AI Innovation Act and H.R. 9497, the AI Advancement and Reliability Act, proposed to establish a center in the Executive Branch to lead a coordinated approach and public-private collaboration on AI security, with a focus on frontier models. We recommend the AI Action Plan endorse and help to codify such a body”

Mozilla: “Policymakers should avoid heavy-handed levers like export controls on open-source AI models.”

Americans for Responsible Innovation: “Because AI with advanced bio and cyber capabilities could cause severe harm to national security, the U.S. government must be proactive about anticipating and mitigating dangers to Americans. AISI has the right and requisite technical capabilities to keep the White House accurately informed—regularly assessing how the frontier of AI science is changing the risk landscape.”

Center for Democracy and Technology: “NIST’s vital role in AI governance is to develop voluntary standards and evaluation and measurement methods grounded in technical expertise … the standards-development process should center not only prospective security risks, but also current, ongoing risks.”

CSET: “Develop best-practices for the release of frontier open models to help preemptively identify and minimize risks, but avoid regulations that unnecessarily hinder or disincentivize the opening of models. Work with industry to establish clear and measurable thresholds for intolerable risk that warrant keeping models closed, and avoid over-indexing on hypothetical risks.”

Center for Data Innovation: “The administration should preserve but refocus the AI Safety Institute (AISI) to ensure the federal government provides the foundational standards that inform AI governance … shifting AISI’s focus toward post-deployment evaluations would give policymakers the real-world data they need to regulate AI based on how it actually performs, rather than on speculative worst-case scenarios, while reducing the need for a patchwork of conflicting state laws”

Center for a New American Security: “The United States must avoid overly restrictive regulation that could halt progress and cede dominance to competitors like China. At the same time, the federal government cannot ignore the potential for serious risks. Indeed, waiting for risks to materialize could be too late for effective and timely responses.”

R Street: “The administration should work with Congress to ensure that NIST’s voluntary, flexible governance approach remains intact without taking on a more aggressive regulatory stance for AI.”

Abundance Institute: “Set forth a use-based approach to AI regulation, emphasizing that AI models are general purpose software that, until put to a particular purpose, pose no risk to consumers.”

Foundation for American Innovation: “Direct AISI and the National Labs to launch an industry collaboration to develop rigorous benchmarks operationalizing ‘dual-use’ thresholds for CBRN capabilities. Publish a real-time tracker of the current state-of-the-art (SOTA) for both closed and open weight models along each capability dimension and by model size.”

Institute for Progress: “We propose that the federal government establish ‘Special Compute Zones’ — regions of the country where AI clusters at least 5 GW in size can be rapidly built through coordinated federal and private action … In return, the government should require security commitments from AI and computing firms”

Also: “The administration, acting through the Secretary of Commerce or National Security Council, should directly task AISI with a clear national security mission”

The discourse

JD Vance still doesn’t seem to grasp the realities of powerful AI:

“I think there’s too much fear that AI will simply replace jobs rather than augmenting so many of the things that we do.”

Meanwhile, Jason Hausenloy has a good piece in Commonplace looking at which jobs are most susceptible to AI automation.

UK Foreign Secretary David Lammy is feeling the AGI:

“As we move from an era of AI towards Artificial General Intelligence, we face a period where international norms and rules will have to adapt rapidly.”

Sam Altman gave some interesting new thoughts on regulation:

“Most of the regulation that we’ve ever called for has been just say on the very frontier models, whatever is the leading edge in the world, have some standard of safety testing for those models. Now, I think that’s good policy, but I sort of increasingly think the world, most of the world does not think that’s good policy, and I’m worried about regulatory capture. So obviously, I have my own beliefs, but it doesn’t look to me like we’re going to get that as policy in the world and I think that’s a little bit scary, but hopefully, we’ll find our way through as best as we can and probably it’ll be fine. Not that many people want to destroy the world.”

He also hinted that OpenAI will start open-sourcing models again soon.

Jensen Huang thinks compute demand is going up, not down:

“The amount of computation needed is easily 100 times more than we thought we needed at this time last year.”

Elon Musk and Ted Cruz talked about AI risks:

TC: “How real is the prospect of killer robots annihilating humanity?”

EM: “20% likely. Maybe 10%.”

TC: “On what timeframe?”

EM: “Five to ten years.”

And Anthropic continues to sound the alarm:

“AI models are displaying ‘early warning’ signs of rapid progress in key dual-use capabilities: models are approaching, and in some cases exceeding, undergraduate-level skills in cybersecurity and expert-level knowledge in some areas of biology … we believe that our models are getting closer to crossing the capabilities threshold that requires AI Safety Level 3 safeguards.”

Policy

NIST issued new directives eliminating mentions of “AI safety” and “fairness” while prioritizing “reducing ideological bias”, Wired reported. EPIC withdrew from NIST's AISI consortium in response.

The Commerce Department banned DeepSeek from government devices.

A federal appeals court ruled that AI systems cannot be granted copyright authorship.

China announced new rules requiring AI-generated content to be labeled.

Chinese government bodies have reportedly increased adoption of DeepSeek after its founder met with Xi Jinping.

Influence

The China-US AI Track Two Dialogue held its third meeting last month. OpenAI board member Larry Summers co-chaired the US delegation.

Sam Altman is a founder of the new “Partnership for San Francisco”, a group of tech and business execs organized by Mayor Daniel Lurie.

TechNet pushed Howard Lutnick for changes to the AI diffusion rule.

Commenting on US AISI’s guidance for managing AI misuse risks, the Center for Democracy and Technology said that though “developers might hesitate to give external red-teamers sensitive details about model design”, they “should be discouraged from relying exclusively on internal experts”.

Industry

The first Stargate data center will reportedly have space for up to 400,000 GPUs, with OpenAI planning to use about 1GW of its capacity.

Microsoft reportedly declined to exercise a $12b option to buy datacenter capacity from CoreWeave, which OpenAI quickly purchased instead.

Crusoe secured a 4.5-gigawatt energy deal with a natural gas turbine company, which it’ll use to power AI data centers.

Nvidia announced new Vera Rubin GPUs, expected next year. SemiAnalysis has a good breakdown on what this means (TL;DR: they’re really powerful).

The company also said that its top four customers have ordered 3.6m Blackwell chips — and that those numbers don’t include Meta. And Jensen Huang denied that he’d been asked to join a consortium to buy Intel.

Nvidia also said that co-packaged optics (which improve connections between chips) isn’t reliable enough for GPUs yet.

Abu Dhabi-based ADQ and Energy Capital Partners announced a $25b+ partnership to build gas-fired power plants for data centers.

Nvidia and xAI joined the Microsoft/MGX/BlackRock AI infrastructure consortium.

Tencent said it’s slowing GPU purchases after implementing DeepSeek’s techniques for more efficient model training.

Google reportedly plans to partner with MediaTek on some TPU designs, though it’s still working with Broadcom as well.

Amazon is reportedly offering customers access to Trainium servers at a quarter of the price of the H100-powered equivalent.

New Intel CEO Lip-Bu Tan is reportedly reviving the company’s plans to design AI chips.

New court filings provided further evidence that Meta used pirated books from LibGen to train its Llama models.

Meta finally rolled out its AI tools in the EU. Llama models have been downloaded 1b times, according to the company.

OpenAI launched o1-pro in its API. It’s very expensive.

It also launched new transcription and voice generation models.

Anthropic finally added web search capabilities to Claude.

It also said it will soon launch a bunch of new enterprise-focused features, such as the ability for Claude to see your work calendar and prepare pre-meeting reports for you.

OpenAI reportedly plans to test new tools that let enterprise users connect ChatGPT to Google Drive and Slack, too.

Google added a Canvas feature and NotebookLM’s audio tools to Gemini.

Manus pays Anthropic about $2 per task, according to The Information.

Baidu released a new reasoning AI model, Ernie X1.

Mistral released a new 24B parameter model which it claims outperforms GPT-4o mini.

Synopsys introduced AI agents to help design computer chips.

CoreWeave reportedly plans to price its IPO at $47-55 per share, aiming to raise up to $2.7b.

Cognition AI raised at a reported valuation of almost $4b.

Perplexity is reportedly in talks to raise up to $1b at an $18b valuation.

SoftBank acquired chip designer Ampere for $6.5b.

Nvidia reportedly acquired synthetic data startup Gretel for over $320m.

xAI acquired Hotshot, a generative AI video startup.

Moves

Apple reshuffled its AI leadership amid embarrassing delays. Vision Pro boss Mike Rockwell will now lead Siri, while John Giannandrea will reportedly continue to work on other AI efforts.

Liam Fedus, who led post-training at OpenAI, left to found an AI materials discovery startup.

Timothy Kurth joined White House OSTP as legislative affairs director.

Michael Brownlie, formerly chief of staff to Sen. Sinema, joined Open Philanthropy as director of government relations. Sharon Yang (ex-Harris campaign) and Karthik Ganapathy (ex-Left Flank Strategies) joined as senior communications officers.

Kirsten D'Souza, formerly of Chamber of Progress, joined Americans for Responsible Innovation as coalitions director.

The Tech Oversight Project launched a California branch, with Nichole Rocha as policy adviser and Kevin Liao as communications officer.

Miles Brundage is now a non-resident senior fellow at the Institute for Progress.

The Information published a Google DeepMind org chart; it found that the group now has 5,600 employees, with 12 people reporting to Demis Hassabis.

Sequoia Capital announced it will close its DC office and lay off its policy team.

Apollo Research is hiring for a couple of engineering roles.

Best of the rest

On Transformer: With dangerous AI capabilities potentially on the horizon, “societal resilience” measures may play an increasingly important role in protecting against AI risks.

Apollo Research found that Claude Sonnet 3.7 “(often) knows when it’s in alignment evaluations”.

Epoch AI tested o3-mini-high on FrontierMath. OpenAI said the model scores 32%, but Epoch found it scored only 11%.

Epoch says “the difference between our results and OpenAI’s might be due to OpenAI evaluating with a more powerful internal scaffold, using more test-time compute, or because those results were run on a different subset of FrontierMath”.

Epoch also released GATE, an interactive model for “AI automation and its economic effects”.

Reuters has a fascinating piece about how, last year, a bunch of data centers in Virginia dropped off the grid at once, leaving a huge surge of surplus electricity that could have caused cascading power outages.

“The number of near-miss events like the one in Data Center Alley has grown rapidly over the last five years as more data centers come online,” Reuters says.

Google published some new research on “scaling laws for DiLoCo”, a distributed training approach. The research claims that “when well-tuned, DiLoCo scales better than data-parallel training with model size, and can outperform data-parallel training even at small model sizes”.

Jack Clark has a good summary of the research and what it means.

Researchers at Google and UC Berkeley claimed to have discovered another new AI scaling method called “inference-time search”, though some seem skeptical.

Philip Fox proposed a Global Alignment Fund to increase AI safety research funding.

Asimov Press has a nice piece looking at FutureHouse, a nonprofit aiming to use AI to automate scientific discovery.

Microsoft partnered with Swiss startup Inait to develop an AI model that simulates mammal brain reasoning.

Adobe reported that traffic from generative AI tools to retail sites increased by 1,300% last holiday season.

The Russo brothers are building an in-house AI studio.

Over 400 Hollywood names urged Trump to maintain copyright protections on AI training.

ChinaTalk translated a 2024 interview with Yang Zhilin, founder of a Chinese AGI effort called Moonshot AI.

The FT has a profile on Alibaba’s big AI pivot.

DeepSeek founder Liang Wenfeng was reportedly mobbed by crowds when returning to his hometown recently.

Thanks for reading; have a great weekend.