I summarised the AI Action Plan comments for you

Transformer Weekly: OpenAI for-profit shenanigans, AI updates from Google’s antitrust trial, and a House CCP Committee report on DeepSeek

Welcome to Transformer, your weekly briefing of what matters in AI. It’s great to be back.

We’re hiring

A reminder that applications are still open for our first two hires at Transformer. We’re looking for a Managing Editor and Reporter to help grow Transformer into the world’s leading publication for AI decision-makers. See more details here — applications close on April 30.

Top stories

The White House published the comments it received as part of its RFI on an AI Action Plan — all 10,068 of them.

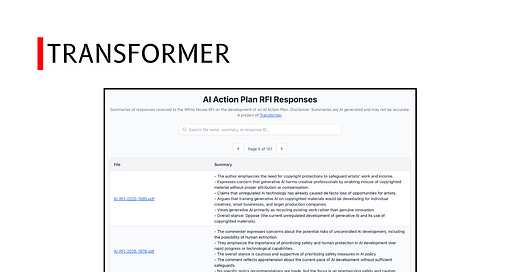

To save you the trouble of reading them all, I’ve generated AI summaries for each response, which you can browse (and search through) on this dashboard.

The most notable thing is how many responses are from the general public — and exhibit an overwhelmingly negative sentiment towards AI.

Copyright is by far the most common topic, based on my AI-assisted analysis, with thousands of people writing in to deem current AI practices “theft”.

Job displacement, environmental concerns, safety risks (including existential risks) and a general sense of distrust towards AI and the companies building it are common themes, too.

A surprisingly large proportion of people also deem the technology “overhyped”, questioning how useful it is (or will be).

By and large, people seem to want regulation — though industry submissions are, unsurprisingly, less keen on the idea.

I thought GPT-4.1’s summary of the responses was pretty good:

“The document paints a picture of a society at odds over AI’s trajectory. The dominant public voice is protective, skeptical, and demands strong new rules. The business voice is split: established tech firms want flexibility and innovation, small businesses feel threatened and want ‘fair play’”.

If you want to run your own analysis on the data, you can download the unabridged documents here, the AI-generated summaries here, and a summary-of-those-summaries (designed to fit inside the context window of a long-context LLM) here.

A group of high-profile people urged California and Delaware’s attorneys general to block OpenAI’s transition to a for-profit company.

Signatories include the usual suspects like Geoffrey Hinton and Stuart Russell, but also Margaret Mitchell, Joseph Stiglitz, and a bunch of former OpenAI employees. The letter was organized by Page Hedley, Encode AI, and Legal Advocates for Safe Science and Technology.

It goes further than previous efforts in that it argues the conversion should be blocked altogether, rather than just going forward at a higher price.

In a statement responding to the letter, OpenAI falsely claimed some of the signatories worked for Anthropic. Oops!

Google finally published a technical report for Gemini 2.5 Pro, weeks after releasing the model. Yet the published report is awfully sparse, and almost completely devoid of safety-relevant information.

As Peter Wildeford told TechCrunch, the report makes it “impossible to verify if Google is living up to its public commitments and thus impossible to assess the safety and security of their models”.

One note on my piece from a few weeks ago: as a few of you pointed out, the White House Commitments don’t explicitly specify when safety evaluations should be published, just that they should be published for every “new” release. So one could argue that no promises have been broken here, as long as the information is published at some point.

That said, I think the spirit of the commitments is pretty clear that these evaluations are supposed to be published alongside product launches, given that they’re intended to ensure users “understand the known capabilities and limitations of the AI systems they use or interact with”. So I’m not sure this changes much.

Google did not respond to Transformer’s requests for comment.

The discourse

Top AI models are getting scarily good at virology, Dan Hendrycks and Laura Hiscott said:

“[A new benchmark] suggests that several advanced LLMs now outperform most human virology experts in troubleshooting practical work in wet labs … All these results point to the same conclusion: widely available AI systems can make it easier for anyone doing work with viruses—including those trying to do harm—to overcome practical issues they might encounter.”

A Gladstone AI report — reportedly circulated inside the White House — claims that all US AI datacenters are vulnerable to Chinese espionage:

“Our investigation exposed severe vulnerabilities and critical open problems in the security and management of frontier AI that must be solved … for America to establish and maintain a lasting advantage over the CCP.”

Politico has a great piece on how lots of people are still worried about advanced AI risks but are struggling to gain traction among policymakers:

“A new wave of startups, research and insider warnings is putting the AI risk conversation back on the radar — with experts, if not yet with policymakers. And this time, it feels far less theoretical.”

Trump’s tariffs could really hurt the AI industry, says SemiAnalysis’s Sravan Kundojjala:

“The economic uncertainty induced by Trump tariffs could become the single largest barrier to American AI supremacy.”

OpenAI board member Zico Kolter isn’t worried about loss of control just yet:

“There's no real risk of things like loss of control with current models right now. It is more of a future concern. But I'm very glad people are working on it; I think it is crucially important.”

OpenAI employee roon pushed back on the idea that AI companies have more powerful models for internal use:

“you are within two months of the bleeding edge of model capabilities at all times. there is no government or megacorp on earth with access to better models than you.”

Policy

President Trump signed an executive order establishing a White House Task Force on AI Education, chaired by Michael Kratsios.

White House AI and crypto czar David Sacks called for more resources for the Bureau of Industry and Security to enforce chip export controls.

The House Committee on the CCP published a report on DeepSeek, calling it “a profound threat to our nation’s security”.

It claims that “the model appears to have been built using stolen U.S. technology on the back of U.S. semiconductor chips that are prohibited from sale to China without an export license”, and recommends “swift action to expand export controls” and “improve export control enforcement”.

It also advises that the US “prevent and prepare for strategic surprise related to advanced AI”, recommending that national security agencies monitor China’s AGI efforts, and “prepare contingency plans” for national security challenges that could arise from US-China competition toward AGI.

The committee also sent a letter to Nvidia trying to figure out how, exactly, DeepSeek got hold of Nvidia chips.

A bunch of Democratic lawmakers questioned DOGE’s use of AI systems.

The Government Accountability Office released a report examining generative AI risks. From a quick skim, it’s not very interesting.

Gavin Newsom wrote to California’s privacy regulators, warning that proposed AI regulations “could create significant unintended consequences and impose substantial costs”.

Montana Governor Greg Gianforte signed the state’s “Right to Compute Act” into law.

The European Commission launched a consultation on guidelines for general-purpose AI models under the AI Act.

ARIA funded six teams to explore sociotechnical integration for AI safety.

Influence

OpenAI spent $560k on lobbying in Q1 2025, 65% up from Q1 2024.

The Hill and Valley Forum published its schedule for next week’s event. Jensen Huang, Jack Clark, Ruth Porat and Kevin Weil are all speaking (among many other AI-related folks).

On the policy side, Mike Johnson, Doug Burgum, Todd Young, Mike Rounds, Ritchie Torres and Stephen Miran are speaking.

California Sen. Jerry McNerney’s SB 813 got support from a bunch of people — perhaps most notably Sam Hammond, who opposed SB 1047.

R Street published a new report on open-source AI, recommending “establishing federal guidelines to clarify legal ambiguities, fostering public–private partnerships for AI validation, implementing risk-tiered liability shields, and promoting community-driven accountability mechanisms”.

Mozilla, McKinsey, and the Patrick J. McGovern Foundation, meanwhile, published a report touting the benefits of open-source AI.

The Center for American Progress told House Energy and Commerce Republicans that “certain high-risk AI applications -- such as automated job termination … must be restricted outright … Federal privacy legislation must not preempt states from enacting or enforcing bans on such applications”.

The Chamber of Progress said California bill AB 412, which would require disclosure of copyrighted training materials, would cost California $381m in state tax revenue, claiming that this would exacerbate the economic disruption caused by Trump’s tariffs.

The Frontier Model Forum published a report on Frontier Capability Assessments.

Industry

OpenAI reportedly now forecasts revenue of $125b by 2029 and $174b by 2030.

It’s reportedly planning to release a new open-weight model this summer.

And it launched a "lightweight" version of its deep research tool, making it free for the first time.

Lots of AI-related nuggets from Google’s antitrust trial this week:

The company pays Samsung an “enormous sum of money” to ensure Gemini is pre-installed on Samsung devices.

Perplexity said it talked to Motorola about being the default AI app on its phones, but a pre-existing agreement with Google stopped Motorola from doing that.

OpenAI tried to license Google’s search index, but was rebuffed.

OpenAI would be interested in buying Chrome if Google’s forced to sell it. So would Perplexity.

Google thinks ChatGPT is taking "homework and math" queries from Google search.

Gemini had 35m daily active users last month.

Meanwhile, Google said it now generates “well over 30%” of its code using AI, up from “more than a quarter” in October.

Huawei reportedly plans to start shipments of its 910C GPUs “as early as May”. The chip is supposedly as good as Nvidia’s H100.

ByteDance, Alibaba and Tencent reportedly ordered a combined 1m H20s from Nvidia to be delivered by the end of May in an attempt to stockpile them before new export controls — but didn’t manage to get all of them in time.

Amazon has reportedly paused some data center lease conversations.

SK Hynix reported a 158% surge in quarterly profit YoY, driven by AI demand.

Microsoft launched new “Researcher” and “Analyst” AI agents.

OpenAI reportedly tried to buy Cursor developer Anysphere, but got turned down. It’s reportedly trying to buy competitor Windsurf for $3b instead.

Character.AI launched a video generation model.

Endor Labs, which scans AI-generated code for security vulnerabilities, raised $93m.

Former Y Combinator president Geoff Ralston launched a new fund focused on AI safety startups.

Moves

Joaquin Quiñonero Candela is no longer OpenAI’s head of Preparedness. The company told Garrison Lovely that it’s consolidating “all governance under the Safety Advisory Group”, chaired by Sandhini Agarwal.

Mark Dalton is R Street’s new senior director of tech and innovation policy.

John Soroushian is now senior director of policy at Americans for Responsible Innovation.

Travis Hall joined the Center for Democracy & Technology as state director.

Apple continues to rejig its Siri team, bringing in new executives Ranjit Desai, Olivier Gutknecht, Nate Begeman and Tom Duffy to get its AI ambitions back on track.

Elon Musk is stepping back from DOGE. He’ll now spend most of his time on Tesla, he said.

Sam Altman resigned as chairman of nuclear energy company Oklo, with the company saying this could make it easier for it to partner with OpenAI.

Kylie Robison is joining Wired as a senior correspondent covering the business of AI.

Miles Kruppa joined The Information to cover AI funding, with a focus on private equity and sovereign wealth funds.

Best of the rest

Lots of talk this week about how o3 and o4-mini hallucinate more than previous models.

A METR evaluation found that o3 “appears to have a higher propensity to cheat or hack tasks in sophisticated ways in order to maximize its score, even when the model clearly understands this behavior is misaligned with the user’s and OpenAI’s intentions”.

GPT-4.1 also “shows a higher rate of misaligned responses than GPT4o”, according to one researcher.

The Economist has a great piece on AI scheming.

o3 and o4-mini’s performance might suggest that the length of tasks AI models can do is doubling every four months, faster than the seven-month doubling rate METR previously measured.

New Epoch AI research found that “AI supercomputers double in performance every 9 months”.

Dario Amodei said Anthropic wants interpretability to “reliably detect most model problems” by 2027.

Anthropic’s Sam Bowman published a blog framing alignment as “putting up bumpers”, saying we should “treat it as a top priority to implement and test safeguards that allow us to course-correct if we turn out to have built or deployed a misaligned model, in the same way that bowling bumpers allow a poorly aimed ball to reach its target”.

Helen Toner’s excellent new Substack has a piece explaining why AI progress might depend on which domains we can auto-grade answers in, and/or whether models can generalise from auto-graded areas to other domains.

The AI Futures Project published an interesting discussion of responses to its AI 2027 forecast.

Arvind Narayanan and Sayash Kapoor wrote an essay arguing that AI is “normal technology”. Narayanan’s appearance on Hard Fork is worth a listen.

Saffron Huang and Sam Manning advocated for "predistribution" over UBI to address AI-driven inequality.

Miles Brundage and Grace Werner argued that an “‘AI deal’ with China could define global security and [Trump’s] legacy”.

A new paper looked at areas where geopolitical rivals could cooperate on technical AI safety, identifying AI verification mechanisms and shared protocols as potential areas.

A new IMF paper estimates that AI energy demand might drive up US electricity prices by as much as 8.6%, and global carbon emissions by 1.2%.

xAI is reportedly using polluting methane gas turbines without permits at its Memphis Colossus data center. There’s a public hearing with the county health department today.

Anthropic said “users are starting to use frontier models to semi-autonomously orchestrate complex abuse systems that involve many social media bots”.

SemiAnalysis has a detailed look at AMD’s AI ambitions, saying the company’s made progress but still has a ways to go.

OpenAI researcher Kai Chen got denied a green card, sparking a discussion about whether Trump’s immigration policies are hurting America’s AI ambitions.

Kevin Roose is writing a book about AGI.

The Washington Post signed a licensing deal with OpenAI. Ziff Davis sued OpenAI for copyright infringement.

Civitai said it will now ban content depicting incest and self-harm, after pressure from payment processors.

The United Arab Emirates said it will become the first nation to use AI to write laws.

California's state bar admitted that AI was used to develop some bar exam questions.

Anthropic did a publicity push for its AI model welfare work. A good time to revisit my piece on this from last year!

Thanks for reading. Have a great weekend — and remember to apply to work at Transformer!
